For the March 2018 development-sprint we scheduled a number of performance optimisations.

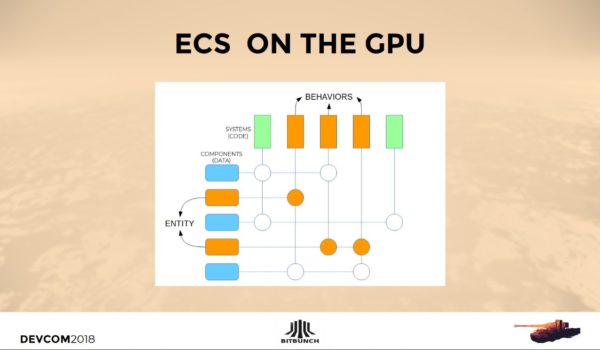

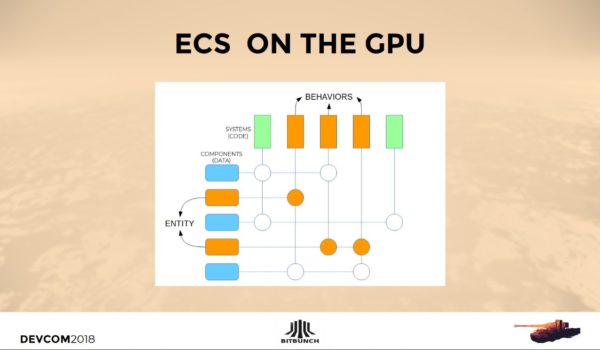

Since MilOps runs almost entirely on the GPU, every cycle spared during rendering can be put to good use in the simulation.

Traditionally a game engine loop basically looks like this:

Step 3 doesn’t look very special or interesting, but is is one of the most important steps when it comes to performance.

Uploading data to the GPU isn’t just like copying data on the CPU. A data transfer over the PCI bus is required. This means setup overhead that is unrelated to the data size and synchronisation costs that can vary considerably between consecutive transfers.

Support for pinned memory and persistent mapped buffers have improved the transfer a lot and combined with double or triple buffering, delays can be reduced. Nevertheless, it remains an expensive step that will continue to require your attention throughout development.

We have the luxury of requiring almost no transfers between CPU and GPU.

The simulation runs completely on the GPU, so preparing render data does not involve an expensive transfer. It does however require us to implement all render data extraction in a parallel fashion which means rethinking the problem completely.

I wont get into programming for massively parallel hardware architectures too much, but I’d like to address one specific aspect in this post; sorting.

When processing data, sorting is a common task. For example, which objects are close to the camera? Sort their positions and you know.

This is fine for a couple of objects, but for our scope a naive approach would run into performance issues immediately. The GPU is capable of efficient sorting. A properly implemented Radix sort can sort millions of items in a few milliseconds. The problem is, the overhead costs are quite high so this is only viable if we actually have millions of items to sort. For thousands of items the fixed overhead makes it relatively expensive.

A local sort (not using a massively parallel approach), can sort a couple of hundred items just fine. But what to do for thousands of items?

I started off applying global sorts, that use the parallel hardware, whenever I needed sorting. Profiling our kernels (GPU code), showed they amounted to more GPU time than other, must more complicated, kernels. So, they needed to be optimised.

Investigating the actual tasks we needed sorting for, gave the solution. It turned out that in most cases we do not actually need sorting, just “grouping”. And if we really needed to sort, for example sorting back-to-front for blending, we can first discard candidates using other techniques before doing a local sort over the remaining items that were actually rendered.

Generally when preparing render-data we have the following sorting situations:

Next, an example of each case.

In the traditional CPU vs GPU scenario, instanced rendering would be prepared by determining the objects that need rendering, then filling a buffer containing render commands (indirect-structs in OpenGL) that refer to the mesh data. Every frame, the order of the indirect-structs in the buffer could be different also because some models may not be rendered at all. Sorting would be used to make sure the vertex attributes are in the order that match the indirect-structs.

For the GPGPU approach, we do it a little different. At start-up we prepare a buffer once, containing indirect-structs, each representing a mesh. During the render-prepare step each frame, we determine the instance count for each mesh. When rendering, we render all indirect-structs. If a mesh didn’t need to be rendered, we simply set its instance-count in the indirect-struct to zero.

This way we know the order in which meshes will be rendered. That means we can do the following; In the prepare-instance-mesh-rendering kernel, we go over all objects in the simulation that could potentially be rendered (in parallel, in our case launching 500K+ threads or kernel-instances). We cull, determine LOD and perform any task that can cause a mesh instance to be discarded for rendering. When done, we know how many instanced of each mesh needs rendering.

Next, we do the same again, culling, LOD, everything. But this time we already know all the offsets because we know the end result. So we can write all vertex-attribute render data for the objects that are processed. For example; we just need to claim any slot for instance 34 of mesh 635 in the range where the indirect-structs will expect data for instances of mesh 635. No problem, after all we know how many instances of all meshes before mesh 635 will be stored.

Now it may seem wasteful to do culling twice. But this is a good example of the many instances where what may have been a good idea for procedural programming, does not apply to massively parallel computing. The culling and LOD operations are not complex, kernels contain no branching of consequence and the memory access patterns are straight forward, predictable and without collisions. This is what a GPU is good at.

How fast is this? Very fast! Our rendering budget on the GPU is small, only 6 milliseconds and that is including the background processing and rasterisation that is being performed to generate terrain tiles when moving around. We need the budget to be this low to allow the 100K units to be simulated at accelerated simulation-speeds.

The sound engine we use is pretty good at handling 64 concurrent sounds. But it would never be able to handle 200K concurrent sounds. How do we get from 200,000 to 64?

Culling helps a bit, but contrary to meshes, we should still hear sounds behind the camera or explosions far away. The important thing for sounds is that I want the user to hear at least the sounds that still have the most energy left when arriving at the listeners (camera) location. So distance is not the (only) metric in this case.

We also know that if there are a lot of loud noises near the listener it will be hard to distinguish all of them.

Using these characteristics we run a kernel, that goes over all playing sounds, 4 times. Every time a different min/max energy range is regarded. For the first run we only want the really loud noises, we want to make sure to have those. The next run we look at the next range and so on.

We may run out of our sounds budget during the second run. But that is ok because that means we already have 64 loud noises and the ones that were audible but not included during the second run, would for sure be overpowered by the louder 64.

The important thing to make sure of is that we have enough budget for at least all sounds in the first energy range. Otherwise we could be discarding a very loud noise, louder than any other that was actually included. Since the order of thread execution and completion is unpredictable, different loud noises would be included or excluded every frame which would be very disturbing.

The same thing happens with rendered objects that reach the render budget. Every frame different objects would be included/excluded resulting in erratic jumping of objects in view.

For a full blown battle arena there are up to 25000 units. That means, 25000 potential icons on screen.

We devised a metric to make sure the important icons push away less important ones. We use the camera position/altitude, the unit-size and a number of additional properties to determine an icon’s importance. Based on that importance icons get drawn smaller and ultimately fade out (blending) when they are overlapped by icons with a higher importance. Since we use actual blending we need to do a Z-sort. Because of the importance determination metrics we are able to discard all icons that do not fit on screen. There are only so much icons that fit. So at the time we need to do the sort we have a small enough set of icons to do a local (not parallel) sort.

For (preparing) rendering we don’t need any global sort. I was able to remove all the sorts I initially used. That did a lot for performance!

The simulation still uses a global sort for the sensor scan implementation. And this will remain the fastest way since the simulation is persistent and independent of the user’s (camera) location. So there is no way to reduce the dataset like we did for rendering and audio.

Developing for the GPU is a bit like learning to program al over, But the rewards are more than worth it. After a couple of years you may actually learn to prefer it, like we do 🙂

Comments and reactions to this blog entry can be made on our forum.

Share: